Interactivity

Interactivity is your chance to fully engage at a personal level by seeing, touching, squeezing, hearing or even smelling interactive visions for the future. There are two sets of interactive demonstration exhibits this year: Interactivity 1 and Interactivity 2. Descriptions of these interactive demonstrations follow the locations and hours listed below.

Jan Borchers, RWTH Aachen University

Lyn Bartram, Simon Fraser University

Sid Fels, University of British Columbia

Locations:

Interactivity 1

Commons (Ballroom C/D, Level 1)

Interactivity 2

Rooms 202/203/204 (Level 2)

Level 2 Foyer (in addition on Tuesday evening)

Hours:

Monday

17:30-19:30 (Interactivity 1 only)

Tuesday

10:00-11:00 (Interactivity 1 & 2)

15:20-16:00 (Interactivity 1 & 2)

17:30-20:00 (Interactivity 2 only)

Wednesday

10:00-11:00 (Interactivity 1 & 2)

15:20-16:00 (Interactivity 1 & 2)

Thursday

10:00-11:00 (Interactivity 1 & 2)

Interactivity 1 [Exhibit Hall]

| Ubiquitous Voice Synthesis: Interactive Manipulation of Speech and Singing on Mobile Distributed Platforms Vocal production is one of the most ubiquitous and expressive activities of people, yet understanding its production and synthesis remains elusive. When vocal synthesis is elevated to include new forms of singing and sound production, fundamental changes to culture and musical expression emerge. Nowadays, Text-To-Speech (TTS) synthesis seems unable to suggest innovative solutions for new computing trends, such as mobility, interactivity, ubiquitous computing or expressive manipulation. In this paper, we describe our pioneering work in developing interactive voice synthesis beyond the TTS paradigm. We present DiVA and HandSketch as our two current voice-based digital musical instruments. We then discuss the evolution of this performance practice into a new ubiquitous model applied to voice synthesis, and we describe our first prototype using a mobile phone and wireless embodied devices in order to allow a group of users to collaboratively produce voice synthesis in real-time. Contact: Nicolas d'Alessandro, nda@magic.ubc.ca |

| Snaplet: Using Body Shape to Inform Function in Mobile Flexible Display Devices With recent advances in flexible displays, computer displays are no longer restricted to flat, rigid form factors. In this paper, we propose that the physical form of a flexible display, depending on the way it is held or worn, can help shape its current functionality. We propose Snaplet, a wearable flexible e-ink display augmented with sensors that allow the shape of the display to be detected. Snaplet is a paper computer in the form of a bracelet. When in a convex shape on the wrist, Snaplet functions as a watch and media player. When held flat in the hand it is a PDA with notepad functionality. When held in a concave shape Snaplet functions as a phone. Calls are dropped by returning its shape to a flat or convex shape. Contact: Roel Vertegaal, roel@cs.queensu.ca |  |

| MudPad: Tactile Feedback for Touch Surfaces MudPad is a system enriching touch surfaces with localized active haptic feedback. A soft and flexible overlay containing magnetorheological fluid is actuated by an array of electromagnets to create a broad variety of tactile sensations. As each magnet can be controlled individually, we are able to produce feedback locally at arbitrary points of interaction. Contact: Yvonne Jansen, yvonne@cs.rwth-aachen.de |

| i*Chameleon: A Scalable and Extensible Framework for Multimodal Interaction i*Chameleon is a multimodal interaction framework that enables programmers to readily prototype and test new interactive devices or interaction modes. It allows users to customize their own desktop environment for interaction beyond the usual KVM devices, which would be particularly useful for users with difficulty using the keyboard and mouse, or for systems deployed in specialized environments. This is made possible with the engineering of an interaction framework that distills the complexity of control processing to a set of semantically-rich modal controls that are discoverable, composable and adaptable. The framework can also be used for developing new applications with multimodal interactions, for example, distributed applications in collaborative environments or robot control. Contact: Wai Wa Tang, will.tang.hk@gmail.com |  |

| Immersive VR: A Non-pharmacological Analgesic for Chronic Pain? This paper describes the research work being carried out by the Transforming Pain Research Group – the only group whose work is entirely focused on the use of immersive VR for chronic pain management. Unlike VR research for acute or short-term pain, which relies on pain "distraction," this research posits a new paradigm for the use of VR. In addition to providing an overview of our work, the present paper also describes one of our current works in detail: the Virtual Meditative Walk. Contact: Meehae Song, meehaes@sfu.ca |

| Tactile Display for Blind Persons Using TeslaTouch TeslaTouch is a technology that provides tactile sensation to fingers moving on a touch panel. We have developed applications based on TeslaTouch for blind users to interpret and create 2D tactile information. We demonstrate these applications and show their potential to support communication among blind individuals. Contact: Cheng Xu, chengx.cn@gmail.com |  |

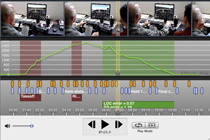

| ChronoViz: A system for supporting navigation of time-coded data We present ChronoViz, a system to aid annotation, visualization, navigation, and analysis of multimodal time-coded data. Exploiting interactive paper technology, ChronoViz also integrates researchers' paper notes into the composite data set. Researchers can navigate data such as video, time-series data, and geographic position in multiple ways, taking advantage of synchronized visualizations and annotations. Paper notes can also be used as a navigation tool, enabling a researcher to control their interaction with the data set with a wireless digital pen and an interactive version of their paper notes. Contact: Adam Fouse, afouse@cogsci.ucsd.edu |

| TagURIt: A Proximity-based Game of Tag Using Lumalive e-Textile Displays We present an electronic game of tag that uses proximity sensing and Lumalive displays on garments. In our game of tag, each player physically represents a location-tagged Universal Resource Indicator (URI). The URIs, one chaser and two target players, wear touch-sensitive Lumalive display shirts. The goal of the game is for the chaser to capture a token displayed on one of the Lumalive shirts, by pressing a touch sensor located on the shirt. When the chaser is in close proximity to the token player, the token jumps to the shirt of the second closest player, making this children’s game more challenging for adult players. Our system demonstrates the use of interactive e-textile displays to remove the technological barrier between contact and proximity in the real world, and the seamless representation of gaming information from the virtual world in that real world. Contact: Roel Vertegaal, roel@cs.queensu.ca |  |

Interactivity 2 [Room 202-204]

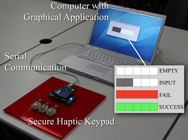

| Obfuscating Authentication Through Haptics, Sound and Light Sensitive digital content associated with or owned by individuals now pervades everyday life. Mediating access to it in ways that are usable and secure is an ongoing challenge. This paper briefly discusses a series of five PIN entry and transmission systems that address observation attacks in public spaces via shoulder surfing or camera recording. They do this through the use of novel modalities including audio cues, haptic cues and modulated visible light. Each prototype is introduced and motivated, and its strengths and weaknesses are considered. The paper closes with a general discussion of the relevance of this work and the upcoming issues it faces. Contact: Andrea Bianchi, andrea.whites@gmail.com |

| ZeroTouch: A Zero-Thickness Optical Multi-Touch Force Field We present zero-thickness optical multi-touch sensing, a technique that simplifies sensor/display integration, and enables new forms of interaction not previously possible with other multi-touch sensing techniques. Using low-cost modulated infrared sensors to quickly determine the visual hull of an interactive area, we enable robust real-time sensing of fingers and hands, even in the presence of strong ambient lighting. Our technology allows for 20+ fingers to be detected, many more than through prior visual hull techniques, and our use of wide-angle optoelectronics allows for excellent touch resolution, even in the corners of the sensor. With the ability to track objects in free space, as well as its use as a traditional multi-touch sensor, ZeroTouch opens up a new world of interaction possibilities. Contact: Jonathan Moeller, jmoeller@gmail.com |  |

| MediaDiver: Viewing and Annotating Multi-View Video We present MediaDiver, our novel rich media interface, which demonstrates our new interaction techniques for viewing and annotating multiple-view video. The demonstration allows attendees to experience novel moving target selection methods (called Hold and Chase), new multi-view selection techniques, automated quality of view analysis to switch viewpoints to follow targets, integrated annotation methods for viewing or authoring meta-content and advanced context-sensitive transport and timeline functions. As users have become increasingly sophisticated when managing navigation and viewing of hyper-documents, they transfer their expectations to new media. Our proposal is a demonstration of the technology required to meet these expectations for video. Thus users will be able to directly click on objects in the video to link to more information or other video, easily change camera views and mark up the video with their own content. The applications of this technology stretch from home video management to broadcast quality media production, which may be consumed on both desktop and mobile platforms. Contact: Gregor Miller, gregor@ece.ubc.ca |

| Blinky Blocks: A Physical Ensemble Programming Platform A major impediment to understanding programmable matter is the lack of an existing system with sufficiently many modules of sufficient capabilities. In this paper we describe the requirements of physically distributed ensembles and discuss the use of the distributed programming language Meld to program ensembles of these units. We demonstrate a new system designed to meet these requirements called Blinky Blocks and discuss the hardware design we used to create 100 of these modules. Contact: Brian Kirby, bkirby@andrew.cmu.edu |  |

| Frictional Widgets: Enhancing Touch Interfaces with Programmable Friction Touch interactions occur through flat surfaces that lack the tactile richness of physical interfaces. We explore the design possibilities offered by augmenting touchscreens with programmable surface friction. Four exemplar applications – an alarm clock, a file manager, a game, and a text editor – demonstrate tactile effects that improve touch interactions by enhancing physicality, performance, and subjective satisfaction. Contact: Vincent Levesque, vlev@cs.ubc.ca |

| Touch and Copy, Touch and Paste SPARSH explores a novel interaction method to seamlessly transfer data between digital devices in a fun and intuitive way. The user touches whatever data item he or she wants to copy from a device. At that moment, the data item is conceptually saved in the user. Next, the user touches the other device he or she wants to paste/pass the saved content into. SPARSH uses touch-based interactions as indications for what to copy and where to pass it. Technically, the actual transfer of media happens via the information cloud. Contact: Pranav Mistry, pranav@mit.edu |  |

| Mouseless - a Computer Mouse as Small as Invisible Mouseless proposes a novel input device that provides the familiarity of interaction of a physical computer mouse without requiring a real hardware mouse. In this short paper, we present the design and implementation of various Mouseless prototype systems, which basically consist of an infrared (IR) laser and a webcam. We also discuss novel gestural interactions supported by Mouseless. We end the paper with a brief discussion on the limitations and future possibilities of the presented Mouseless prototypes. Contact: Pranav Mistry, pranav@mit.edu |

| Galvanic Skin Response-Derived Bookmarking of an Audio Stream We demonstrate a novel interaction paradigm driven by implicit, low-attention user control, accomplished by monitoring a user’s physiological state. We have designed and prototyped this interaction for a first use case of bookmarking an audio stream, to holistically explore the implicit interaction concept. A listener’s galvanic skin response (GSR) is monitored for orienting responses (ORs) to external interruptions; our research prototype then automatically bookmarks the media such that the user can attend to the interruption, then resume listening from the point at which he/she was interrupted. Contact: Matthew Pan, mpan9@interchange.ubc.ca |  |

| 3D-Press 3D-Press is a technique to create the haptic illusion of pressing on a compliant surface, when the surface pressed is actually rigid. The user applies force on a hard, rigid surface and feels on the hand that the point of contact penetrates the material, with a defined mechanical behavior. The user can try different mechanical behaviors, resembling a spring-mounted button, foam, rubber, sand… The illusion is haptic only, created combining force sensing and vibrotactile actuation. It does not require any vision or audio to be experienced, but these can further enhance the experience. Contact: Johan Kildal, johankildal@gmail.com |

| RayMatic: Ambient meter display with facial expression and gesture This paper presents the concept for an experimental product that moves beyond conventional, number-based interfaces. It explores an approach to engaging and emotional human-computer interaction through the use of facial expression and gesture. Using sensors and touch technology, an ordinary picture frame becomes an interactive meter display that conveys environmental information in a non-intrusive way as an ambient display. Contact: Ray Yun, salight105@gmail.com |  |

| Snow Globe: A Spherical Fish-Tank VR Display In this paper, we present a spherical display with Fish-Tank VR as a means for interacting with three-dimensional objects. We implemented the spherical display by reflecting a projected image off a hemispherical mirror, allowing for a seamless curvilinear display surface. Diffuse illumination is used for detecting touch points on the sphere. The user’s head position and the position of the sphere are also tracked using a Vicon motion capture device. Users can perform multi-touch gestures to interact with 3D content on the spherical display. Our system relies on the metaphor of a snow globe. Users can walk around a display while maintaining motion parallax corrected viewpoints of the object on the display. They can interact with the 3D object using multitouch interaction techniques, allowing for rotating and scaling of the 3D model on the display. Contact: Roel Vertegaal, roel@cs.queensu.ca |

| INVISQUE: Intuitive Information Exploration through Interactive Visualization In this paper we present INVISQUE, a novel system designed for interactive information exploration. Instead of a conventional list-style arrangement, in INVISQUE information is represented by a two-dimensional spatial canvas, with each dimension representing user-defined semantics. Search results are presented as index cards, ordered in both dimensions. Intuitive interactions are used to perform tasks such as keyword searching, results browsing, categorizing, and linking to online resources such as Google and Twitter. The interaction-based query style also naturally lends the system to different types of user input such as multi-touch gestures. As a result, INVISQUE gives users a much more intuitive and smooth experience of exploring large information spaces. Contact: Chris Rooney, c.rooney@mdx.ac.uk |  |

| Coco - The Therapy Robot Coco is a therapeutic robot designed for elderly people living in a nursing home. Coco is intended to delight and entertain its user and accompany them throughout the day. Coco is not a replacement for human contact; rather, it is a supplement. It has five main functions: reading, singing, calendar, quiz games and the random function. It can be operated by voice control, a remote control or buttons on its base. You can also pet Coco, and it will give positive feedback and move its beak and wings. Contact: Katharina Tran phuc, kathatp@gmail.com |

Interactivity Special Performances

| What Does A Body Know? The Visual Voice Project is developing Digital Ventriloquized Actors (DiVAs) for use in artistic performance. DiVAs are performers able to use physical gestures to control a speech synthesizer. The physical gestures are tracked using glove sensors and a position sensor, allowing the performers to sing and speak using an artificial voice. In "What Does A Body Know?", DiVA performer Marguerite Witvoet performs a mono/dialog in which the character gradually "discovers" the DiVA voice during the performance, culminating in a "duet" between the character and the DiVA voice. Contact: Robert Pritchard, bob@interchange.ubc.ca |

| humanaquarium: exploring audience, participation, and interaction humanaquarium is a movable performance space designed to explore the dialogical relationship between artist and audience. Two musicians perform inside the cube-shaped box, collaborating with participants to co-create an aesthetic audio-visual experience. The front wall of the humanaquarium is a touch-sensitive FTIR window. Max/MSP is used to translate the locations of touches on the window into control data, manipulating the tracking of software synthesizers and audio effects generated in Ableton Live, and influencing a Jitter visualization projected upon the rear wall of the cube. Contact: Robyn Taylor, rltaylor@ualberta.ca |  |

| Graffito: Crowd-based Performative Interaction at Festivals Graffito is a multi-participant drawing application developed for the iPhone, iPad and iPod Touch that allows anyone to draw with anyone else, anywhere in the world. The application allows participants to draw digital graffiti in real time on a mobile phone by drawing on the mobile phone touch screen with their finger, or shaking the mobile phone. Since the mobile phones are networked together, multiple participants can draw graffiti at the same time. The drawings slowly fade out over the period of one minute, aimed at encouraging quick, lightweight contributions, providing continuous interaction opportunities. Contact: Jennifer Sheridan, jennifer@bigdoginteractive.co.uk |

© 2010 ACM SIGCHI
